The Power of Generative UI with Google’s GenUI SDK for Flutter

A Dynamically Visual and Infinitely Personalized Approach to Driving Customer Behaviors

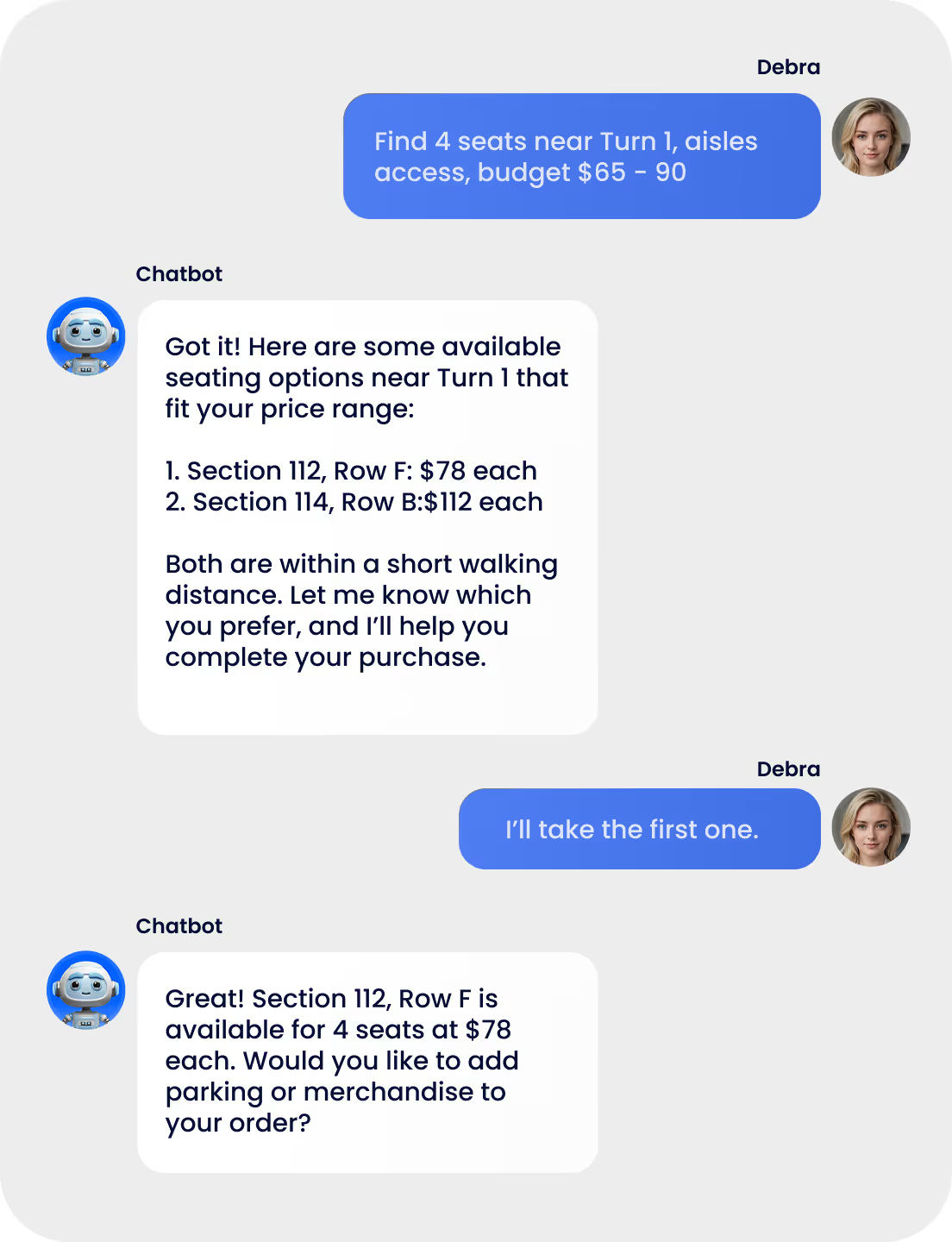

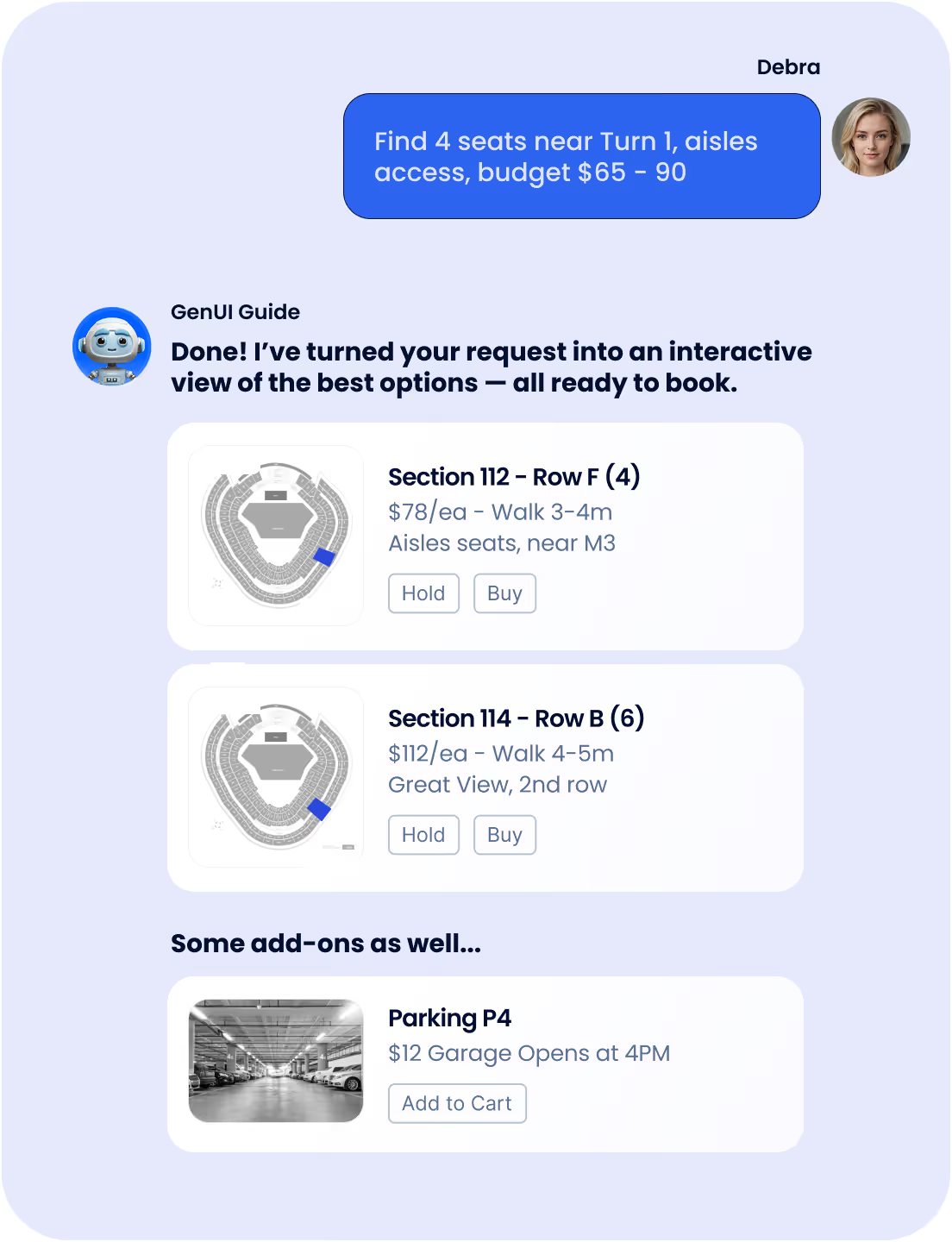

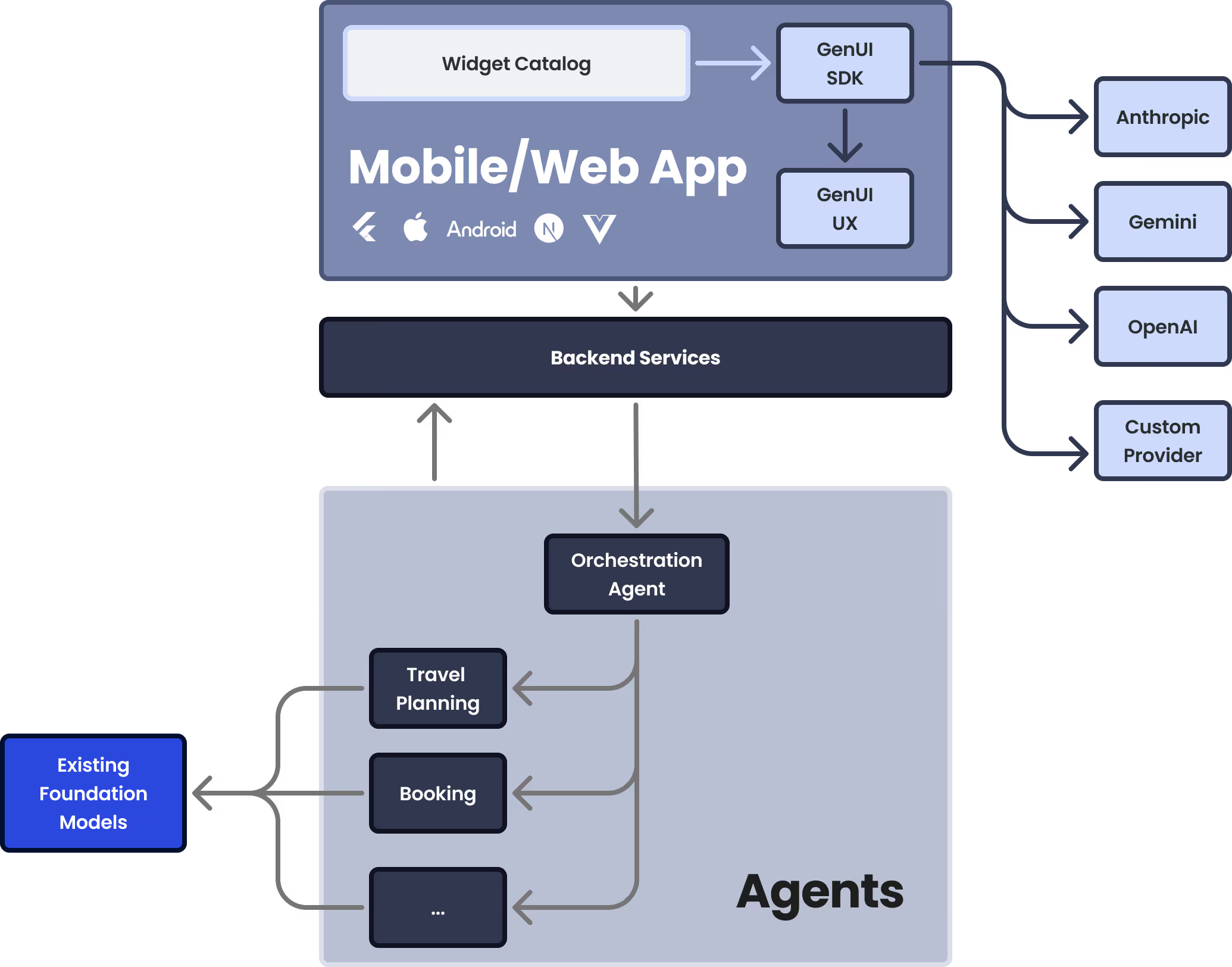

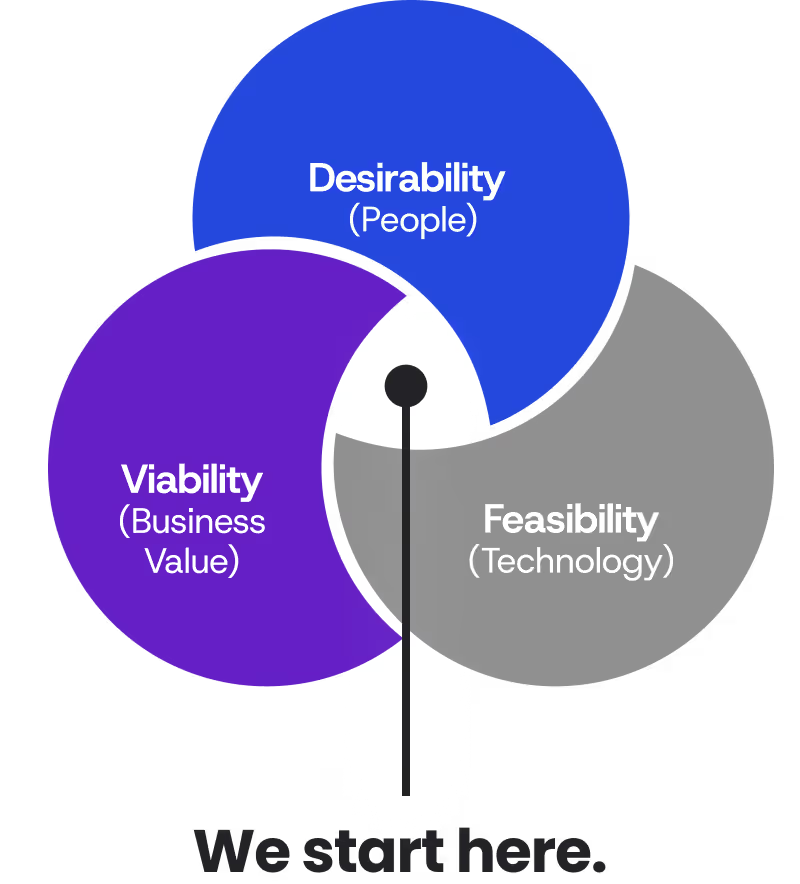

VGV is pioneering a new era of Generative UI, powered by Google’s new GenUI SDK for Flutter, to create responsive, visual interfaces that adapt in real time to user intent. Design elements are generated dynamically from the user’s context. This makes tasks faster and more intuitive to complete, and it also lets businesses guide customers along high-priority journeys.

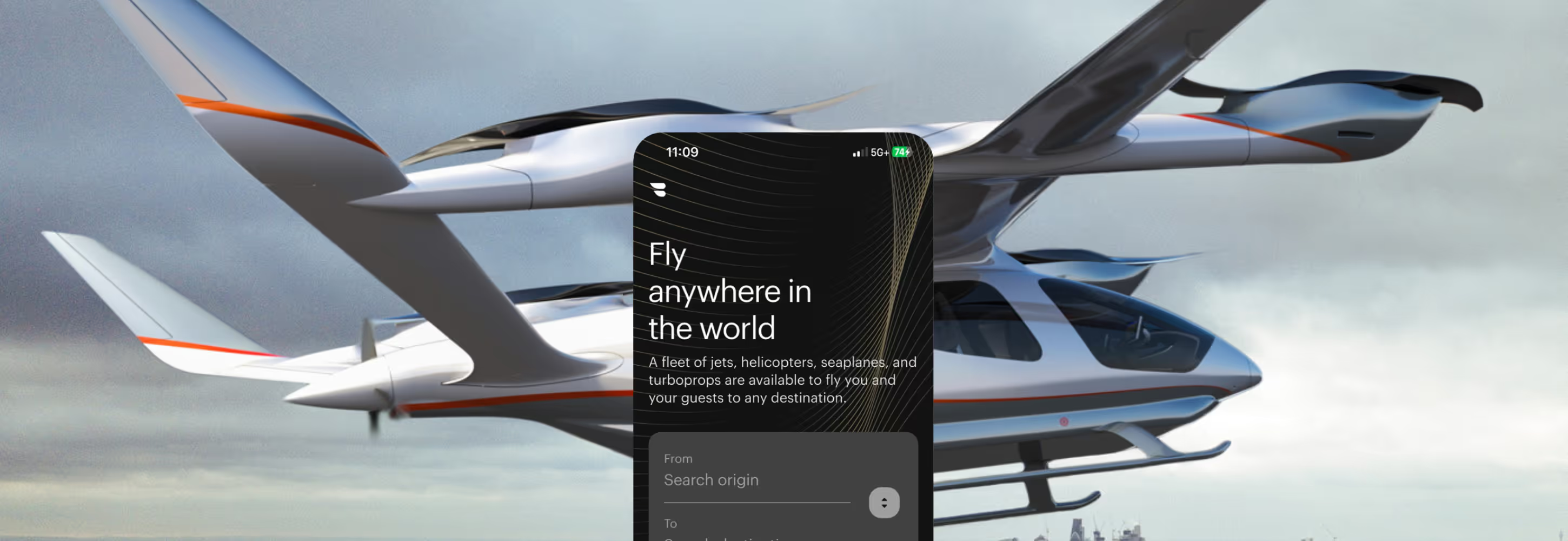

Proven Results Across Industries

Let’s Build an App That Steals the Show

We create transformative digital experiences. Let’s see where your vision can go today.

Insights From Our Experts

How to Write a Mobile RFP That Actually Gets You the Right Agency

A practical guide for writing an RFP that attracts strong partners, surfaces real differences, and sets your app up for success.

What is Nostr? The Decentralized Protocol for Developers

The Flip: A Developer's First Look at Nostr, the Protocol That Inverts the Client-Server World


Get Ready for the Flame Game Jam 2026

The Flame Game Jam 2026 runs from March 6–15, and this year's edition comes with serious prizes: a Mecha Comet device and tickets to Flutter & Friends (among others). Don't miss out!

7 MCP Servers Every Dart & Flutter Developer Should Know

How AI Agents and MCP Servers are Transforming Developer Workflows
